When I was studying Economics at school, I thought of myself as an economist. (I even put that down as my first choice of summer work when I was hoping not to actually be offered a job. My second choice was astronaut: I didn't get that either. I was offered a job as a sweeper in London Zoo. But I digress.)

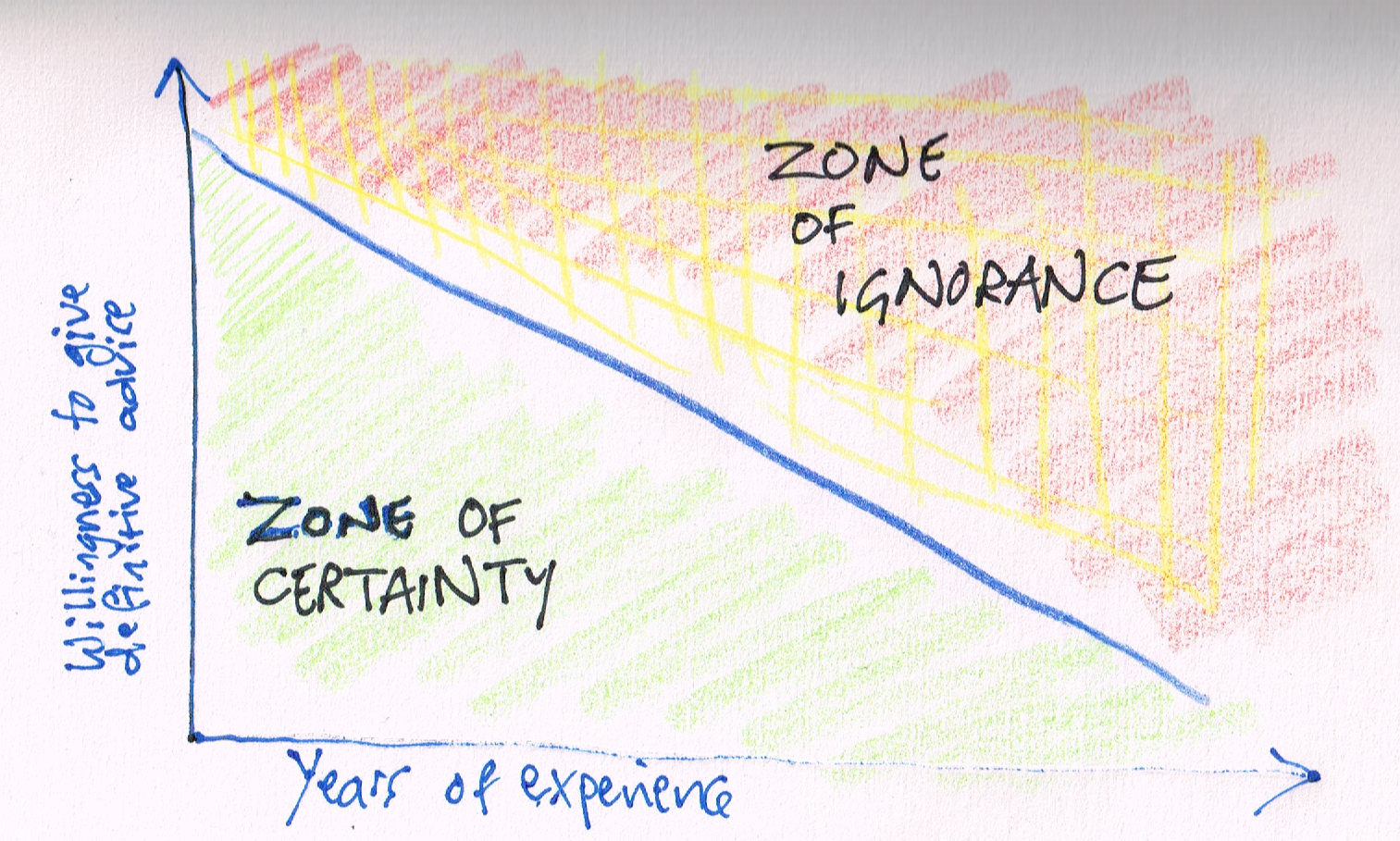

By the time I'd completed a degree in the subject, I was aware of the vast expanse of ignorance opening up before me.

In fact, academic education can be summarised by the observation that it involves knowing more and more about less and less -- until you reach PhD level, where you know everything about nothing!

On a practical level, it makes setting multiple-choice test questions very difficult -- because really clued-up students will often realise that at least two answers are equally viable, depending on the assumptions you make.

I think in the realm of ICT and Computing, it becomes even more difficult to set up a situation in which you can know in advance what the correct answers are, especially if you set open-ended tasks.

Good students will suggest ways of solving a problem that may never have occurred to you. In closed tasks they may be at a loss as to what the correct answer is because they don't know what you had in mind.

For example, let's suppose you asked your students to create a situation in which their solution looks up a data entry, and then tries to match that with something else. You know, the sort of situation you would have when looking up a customer's records. Off the top of my head I can think of several ways of achieving this, and they all have their own advantages and disadvantages.

For instance, a bespoke program or a relational database would seem to be the most obvious options, but if there are only 100 records, say, then those approaches might be considered overkill. That would leave something like a simple database, or a spreadsheet (which in effect amounts to the same thing).

If a spreadsheet is decided upon, should IF functions be used, or the more elegant Lookup functions? Again, each has its own merits and drawbacks.
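
To make the IF-versus-Lookup choice concrete, here is a rough sketch in Python rather than in spreadsheet formulas. It is only an illustration: the customer IDs, the names and the two function names are all made up, and a real student solution could look quite different.

```python
# Hypothetical customer records; the IDs and names are invented for illustration.
CUSTOMERS = {
    "C001": "Ada Lovelace",
    "C002": "Alan Turing",
    "C003": "Grace Hopper",
}


def find_with_ifs(customer_id):
    """IF-style approach: one explicit test per record."""
    if customer_id == "C001":
        return "Ada Lovelace"
    elif customer_id == "C002":
        return "Alan Turing"
    elif customer_id == "C003":
        return "Grace Hopper"
    return "Not found"


def find_with_lookup(customer_id, table=CUSTOMERS):
    """Lookup-style approach: search a table of records instead."""
    return table.get(customer_id, "Not found")


print(find_with_ifs("C002"))
print(find_with_lookup("C002"))
print(find_with_lookup("C999"))
```

Both functions give the same answers for these three records. The IF version is easy to read for a handful of entries, but every new customer means another branch; the lookup version leaves the logic alone and only the table grows. Either could be a perfectly defensible answer, depending on the assumptions the student makes.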

This is why, when it comes to assessing students' abilities, I don't much like rubrics. They are superficially attractive, but they tend to limit student choices, and are nowhere near as objective as they make themselves out to be.

What's the solution? I would not be so bold as to offer *the* solution, but one solution is to set a problem, or ask the students to identify a problem, and then let them solve it in whatever way they like. Then judge their solutions using your professional judgement -- see "Professional judgement in assessing computing" for further thoughts on this. Obviously, you would need to seek the opinions of colleagues too if possible, just in case you're wrong -- that's why moderation is such a good idea.

But whatever you think of my suggestion, I think the problem itself is worth thinking about. After all, you could end up in the situation in which the student who is the best in the class at ICT or Computing is displaying the most ignorance, just because your assessment tasks aren't really suitable.